Creative Computing Major Project Semester 2, 2006

Delayed Extraction...

Program Note:

Delayed Extraction

10'30

This work evolved into an exploration of the sonic and musical possibilities offered by a mere handful of guitar tones. With enough experience, it becomes very rewarding to approach electronic music performance with deliberate restrictions on the tools you use. I felt the restrictions did not adversely affect my creativity; instead, they opened my eyes to concepts I had overlooked in the past.

My initial approach was to use large chunks of pre-prepared audio as a base and ‘improvise’ with whatever I could over the top. Realising soon enough that this kept me locked in ‘studio composition’ mode, I opted for a more abstract approach. My point of departure then became a concept I have described as ‘abstract guitar’. This involves capturing a few select notes from the guitar with an audio recording device, then quickly splicing and manipulating them with editing and sequencing software (Ableton Live, F-scape, and Plogue Bidule) to build a musical result as quickly as possible. It is an exciting and often unpredictable way of composing that yields many surprising results.

The conventional drum kit began as an experiment that became too important to leave out. Even when writing abstract music, I still crave a definable rhythm section of some description. In this case, the drum kit is a built-in programmable feature of Ableton Live, and a fine-sounding one at that…

Analysis:

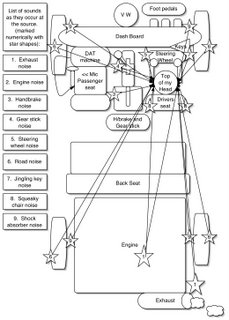

My approach to this project quickly became focused on reducing the time lag between realising individual components. The Kawai K5000 has been instrumental in this regard. Its first task was to ease the pain of recording audio samples from my guitar. By mapping its incoming MIDI data through a ‘CC to parameters’ device, I was able to give myself a couple of on/off controls for the file recorders. This is not as convenient as having a button to press, as the K5000 only has rotary knobs, but it beats using the mouse. The real convenience is that you don’t even need to open the file recorders to use them (although it is advisable for visual confirmation that they are working). It’s as simple as turning the knob hard right to start recording and hard left to stop. The newly created audio files conveniently turn up in Live’s file manager, provided you have set your recording path correctly.
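The knob-as-switch trick can be thought of as a small state machine. The sketch below is a hypothetical illustration in Python, not the logic of the ‘CC to parameters’ device itself. It assumes the knob sends standard 7-bit MIDI CC values (0 to 127), treats exactly 127 as ‘hard right’ and 0 as ‘hard left’, and ignores everything in between so that sweeping the knob cannot retrigger the recorder. The class name and thresholds are invented for illustration.

```python
from dataclasses import dataclass, field

# Assumed thresholds: the text only says "hard right" starts recording and
# "hard left" stops it; mapping those to CC 127 and CC 0 is an assumption.
START_THRESHOLD = 127
STOP_THRESHOLD = 0


@dataclass
class KnobRecordToggle:
    """Hypothetical model: turns one knob's stream of MIDI CC values
    into start/stop events for a file recorder."""
    recording: bool = False
    events: list = field(default_factory=list)

    def on_cc(self, value: int) -> None:
        if not 0 <= value <= 127:
            raise ValueError("MIDI CC values are 7-bit (0-127)")
        # Only the extremes change state; intermediate values are ignored,
        # so turning the knob through its range does not retrigger anything.
        if value >= START_THRESHOLD and not self.recording:
            self.recording = True
            self.events.append("start")
        elif value <= STOP_THRESHOLD and self.recording:
            self.recording = False
            self.events.append("stop")


# Simulated knob movement: sweep right, hold, then sweep back left.
toggle = KnobRecordToggle()
for cc in [0, 40, 90, 127, 127, 100, 30, 0, 64]:
    toggle.on_cc(cc)
print(toggle.events)  # ['start', 'stop']
```

Acting only on the extremes gives the knob a wide dead zone, which is roughly what makes a rotary control usable as an on/off switch.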

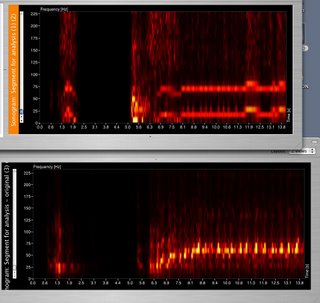

With audio capture sorted out, it was time to look at audio file manipulation, which was to be the main point of interest in the piece. It quickly became apparent that the type of audio captured would strongly influence its potential for real-time manipulation. The more complex I tried to make the files (for example, recording predetermined guitar riffs without following a metronome), the more difficult they became to work with in real time. A change of tactic was needed, so I decided to simplify the input and just play single notes, which would then be chopped up in F-scape and sequenced as small segments in Live. The results were immediately more successful, so I stayed on this path.
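The single-note workflow comes down to two steps: cut one short recording into segments, then trigger those segments from a step pattern. The Python sketch below illustrates the idea on a plain list of samples. It is not how F-scape or Live's Impulse work internally, and the function names, equal-length slicing, and fixed step length are assumptions made for illustration.

```python
def slice_note(samples, num_slices):
    """Split a mono sample buffer into num_slices contiguous segments.

    All segments share the same length except the last, which also takes
    any leftover samples so nothing is discarded.
    """
    if num_slices < 1:
        raise ValueError("num_slices must be at least 1")
    size = len(samples) // num_slices
    if size == 0:
        raise ValueError("buffer is too short for that many slices")
    slices = [samples[i * size:(i + 1) * size] for i in range(num_slices - 1)]
    slices.append(samples[(num_slices - 1) * size:])
    return slices


def sequence(slices, pattern, step_length):
    """Render a step pattern into one output buffer.

    Each pattern entry is a slice index, or None for a rest. Every step
    lasts exactly step_length samples: longer slices are truncated and
    shorter ones (or rests) are padded with silence.
    """
    out = []
    for index in pattern:
        segment = [] if index is None else slices[index][:step_length]
        out.extend(segment)
        out.extend([0.0] * (step_length - len(segment)))
    return out


# A stand-in "note" of eight samples, cut into four slices and re-ordered.
note = [float(n) for n in range(8)]
slices = slice_note(note, 4)
print(sequence(slices, [0, 2, None, 1], step_length=2))
# [0.0, 1.0, 4.0, 5.0, 0.0, 0.0, 2.0, 3.0]
```

Because every step has a fixed length, the re-ordered fragments stay locked to a grid, which is one way a simple single-note input can stay easy to handle in real time.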

There was still the issue of time lag, however: recording a note, dumping it to F-scape for splicing, and then loading the Impulse playback devices in Live was still stretching a piece out to around thirty minutes from start to finish. The only solution my limited experience with this type of performance could suggest was to create a detailed and straightforward score to follow. This way I would not waste time between processes dwelling on what needed to be (or what could be) done next. Taking advantage of OS X’s handy screenshot capability, it was simple to provide a relevant visual cue for each upcoming step. If a step involved Live's Impulse, for instance, the score would show a picture of the device along with a short written direction clarifying the required procedure. Some parts of the score contained traditional notation, to provide a reference point for either the input of guitar notes or the way they might be sequenced. Although these could be followed to the note if desired, they are only intended as a rough guide, removing the need for sophisticated conventional musical thought, which would hamper the process.

The downloads listed below contain the complete performance in MP3 format, along with the relevant program files if you feel the urge to recreate the masterpiece for yourself...

Delayed Extraction (14.4 MB MP3)

Performance Live Patch (0.097 MB Ableton Live file)

Performance Bidule Patch (0.243 MB .bidule)

Performance Reason Patch (0.034 MB .rsb)

Performance Cubase Score File (0.060 MB .cpr)

Performance Complete Score (2.6 MB .doc)