Audio Arts - Minor Project Semester 2, 2006

Driving the Type 3 Volkswagen:

Aint she the purrtiest...


Process information:

My initial objective was to create a realistic representation of the car’s engine sound. At first I stumbled across a potentially suitable sound using Plogue Bidule’s FFT and spectral-to-MIDI devices, which converted my raw guitar signal and sent the interpreted MIDI data to Reason. A bass synthesiser patch in Reason produced some very VW-like results, as the random incoming MIDI data caused it to cough and splutter low-frequency notes of randomly varying pitch and duration. Listening to these results alongside the original recording of the car, however, quickly showed them to be too unpredictable for practical use as engine noise.

Instead I opted for white noise modulation in Plogue Bidule, as this offered a great deal of control over the sound and the variable aspects of its character. I used a mixture of MIDI control and real-time control over the frequency variables with the mouse, simply recording my efforts and splicing out the useful components. The MIDI control set-up was tricky at first, but establishing a connection between Plogue Bidule and Cubase proved well worth the effort. A MIDI-to-value device receiving modulation data from Cubase, together with a parameter modulator in Bidule, allowed me to map the incoming control data easily onto Bidule’s variable devices. This enabled the use of ‘ramp drawing’ in Cubase to visually represent what was happening in the recording and in my synthesised sound, namely the revving of the car engine. I used automation in this way only for the low frequency component of the engine noise, as the white noise modulation had already been achieved satisfactorily through real-time human control.
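
The mapping described above can be sketched outside Bidule. The Python below is only an illustration under stated assumptions, not the actual patch: it scales a 7-bit MIDI controller value (0 to 127) linearly onto a pulse rate and uses that rate to amplitude-modulate white noise. The function names, the 10 to 60 Hz rate range and the 8000 Hz sample rate are all made up for the example.

```python
import math
import random

SAMPLE_RATE = 8000  # Hz; assumed, kept low so the sketch runs quickly


def cc_to_value(cc, lo, hi):
    """Scale a 7-bit MIDI controller value (0-127) linearly onto [lo, hi]."""
    cc = max(0, min(127, cc))
    return lo + (hi - lo) * cc / 127.0


def ramp(start_cc, end_cc, steps):
    """A straight-line controller ramp, like one drawn in a sequencer lane."""
    if steps < 2:
        return [start_cc]
    return [round(start_cc + (end_cc - start_cc) * i / (steps - 1))
            for i in range(steps)]


def modulated_noise(cc_values, seconds_per_step=0.1, seed=0):
    """White noise whose amplitude pulses at a rate set by each CC value."""
    rng = random.Random(seed)
    samples = []
    phase = 0.0
    n = int(SAMPLE_RATE * seconds_per_step)
    for cc in cc_values:
        rate_hz = cc_to_value(cc, 10.0, 60.0)  # hypothetical "rev" range
        for _ in range(n):
            phase += 2.0 * math.pi * rate_hz / SAMPLE_RATE
            envelope = 0.5 * (1.0 + math.sin(phase))  # pulse between 0 and 1
            samples.append(envelope * rng.uniform(-1.0, 1.0))
    return samples


rev_up = ramp(0, 127, 20)        # controller ramp from idle to full revs
audio = modulated_noise(rev_up)  # 2 seconds of pulsing noise
```

Keeping the phase running across steps avoids a jump in the envelope each time the controller value changes, which is roughly what a smooth drawn ramp achieves in the sequencer.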

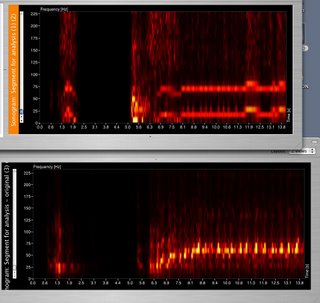

The combination produced some characteristics strikingly analogous to the real-world engine sound, as you will see from inspecting the attached sonogram. By comparison, the synthesised sound has a heavier and more consistent low frequency presence in the 10 to 20 Hz range, but this is of negligible importance as human hearing is weak at that level anyway. The real success is evident in the 60 to 75 Hz range. The synthesised sound has a stronger and more consistent signal there than the original, but this can most likely be attributed to the bass frequency, which is kept low in the mix anyway.
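
A band comparison like this can also be put into numbers. The sketch below is an illustration rather than the analysis behind the sonogram: it uses the Goertzel algorithm to sum the power in every DFT bin between two frequencies. The two signals are synthetic stand-ins (plain sine waves at an assumed 1000 Hz sample rate), not the project recordings, and the function names are hypothetical.

```python
import math


def bin_power(samples, k):
    """Normalised power in DFT bin k, computed with the Goertzel algorithm."""
    n = len(samples)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in samples:
        s0 = x + coeff * s1 - s2
        s2, s1 = s1, s0
    return (s1 * s1 + s2 * s2 - coeff * s1 * s2) / (n * n)


def band_power(samples, sample_rate, lo_hz, hi_hz):
    """Sum the power of every DFT bin that falls inside [lo_hz, hi_hz]."""
    n = len(samples)
    k_lo = math.ceil(lo_hz * n / sample_rate)
    k_hi = math.floor(hi_hz * n / sample_rate)
    return sum(bin_power(samples, k) for k in range(k_lo, k_hi + 1))


def sine(freq_hz, amplitude, sample_rate, seconds):
    """A test tone standing in for a real recording."""
    n = int(sample_rate * seconds)
    return [amplitude * math.sin(2.0 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]


RATE = 1000  # Hz; assumed, enough for content below 500 Hz
synth = sine(65.0, 1.0, RATE, 1.0)     # stand-in for the synthesised file
original = sine(65.0, 0.5, RATE, 1.0)  # stand-in for the original file

synth_band = band_power(synth, RATE, 60.0, 75.0)
original_band = band_power(original, RATE, 60.0, 75.0)
print(synth_band > original_band)  # the louder stand-in carries more power
```

With real recordings the same two calls would give a single figure per file for the band in question, which is easier to compare than shading on a sonogram.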

For the more incidental noises in the car I chose to use straight recording and audio manipulation techniques. The emulated sounds came from limited sources such as the zippers on a backpack, paper being scraped and rubbed together, and simple percussive noises made by hitting various ‘in-house’ objects in studio 2’s dead room. For ease of selection during sequencing, each file was named after the real-world sound I would most likely associate it with. I deliberately recorded a limited set of sounds and vowed to manipulate them as necessary to emulate the various sonic events inside the car, using tried and tested techniques such as splicing, stretching, pitch shifting and delay.
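
For readers unfamiliar with these manipulations, here is a minimal Python sketch of three of them working on plain lists of samples. It is not the editor’s implementation: the function names are hypothetical, and `resample` is the naive tape-style approach in which pitch and length change together, whereas a real editor can stretch and pitch shift independently.

```python
def splice(samples, start, end):
    """Cut out the samples from index start up to (not including) end."""
    return list(samples[start:end])


def resample(samples, ratio):
    """Tape-style speed change using linear interpolation.

    ratio > 1 raises the pitch and shortens the sound,
    ratio < 1 lowers the pitch and lengthens it.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    last = len(samples) - 1
    out = []
    for i in range(int(len(samples) / ratio)):
        pos = i * ratio
        j = min(int(pos), last)
        frac = pos - j
        nxt = samples[j + 1] if j < last else samples[j]
        out.append(samples[j] * (1.0 - frac) + nxt * frac)
    return out


def echo(samples, delay_samples, feedback=0.5):
    """Feedback delay: each sample is added back in, quieter, later on."""
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    out = [float(s) for s in samples]
    for i in range(delay_samples, len(out)):
        out[i] += feedback * out[i - delay_samples]
    return out


zipper = [0.0, 1.0, 2.0, 3.0]        # stand-in for a recorded sound
faster = resample(zipper, 2.0)       # an octave up, half as long
slower = resample([0.0, 2.0], 0.5)   # an octave down, twice as long
tail = echo([1.0, 0.0, 0.0, 0.0, 0.0], 2)
```

Chaining these (splice a hit out of a longer take, slow it down, add a short echo) is the kind of processing that lets a small set of recordings cover many different events.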

The surround sound mixdown in studio one proved a little tricky at first. The presence of audible clicks was the first concern. A quick check of the EMU FAQ revealed that this can arise from having ‘multiprocessing’ ticked in the devices / expert menu. Unchecking the relevant box seemed to alleviate the problem. All of the control parameters relating to 5.1 mixing worked pretty much exactly as my lecture notes stated. Cubase initially lost touch with the HD device, but quickly switching the ASIO functions and switching back sorted this out.

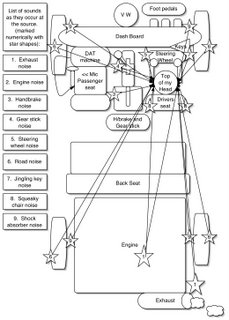

Sonically, I’m happy overall with the final product. I have a small concern with the placement of certain sounds in the surround sound context. In the current set-up of studio one, the front left and right speakers are positioned more for stereo monitoring from the Control 24 surface. This makes mixing in Cubase a little disorienting, as you repeatedly need to make a change at the computer keyboard and then adjust your position in the room to judge the results effectively. I could have moved the speakers to a more suitable location, but shifting expensive and unstable hardware is something I try to avoid unless necessary. As a result, in some cases I have gone with the on-screen representation of sound locations, matching how they appear on my sound event map. I felt this would be a better option than overcompensating for a problem that is only relevant to the set-up of a particular studio.

Type 3 Synthesis (4.2MB Mp3)

The sonogram: the artificial file is on top, the original file is below.

